Super Resolution – Machine Learning

Super resolution is a field within machine learning and AI concerned with creating a model that can upscale an image while intelligently filling in the new pixels in between. The goal is a model that yields better results than the basic interpolation algorithms, such as bilinear filtering, used for texture filtering.
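For comparison, the interpolation baseline that a super-resolution model should beat can be sketched as a plain bilinear upscale. This is a minimal sketch, assuming a single-channel (grayscale) image stored as a list of rows of floats and an integer scale factor; `bilinear_upscale` is a hypothetical helper, not part of the author's source code.

```python
def bilinear_upscale(image, scale):
    """Upscale a grayscale image (list of rows of floats) by an integer factor
    using bilinear interpolation, the kind of filtering a GPU applies to textures."""
    src_h, src_w = len(image), len(image[0])
    dst_h, dst_w = src_h * scale, src_w * scale
    out = [[0.0] * dst_w for _ in range(dst_h)]
    for y in range(dst_h):
        # Map the destination pixel center back into source coordinates
        # (half-pixel convention), clamped to the valid source range.
        sy = min(max((y + 0.5) / scale - 0.5, 0.0), src_h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, src_h - 1)
        fy = sy - y0
        for x in range(dst_w):
            sx = min(max((x + 0.5) / scale - 0.5, 0.0), src_w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, src_w - 1)
            fx = sx - x0
            # Blend the four nearest source pixels, weighted by distance.
            top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
            bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
            out[y][x] = top * (1 - fy) + bottom * fy
    return out


low_res = [[0.0, 1.0],
           [1.0, 0.0]]
up = bilinear_upscale(low_res, 2)
```

A 2x2 checkerboard upscaled by 2 becomes a 4x4 smooth gradient: every new pixel is a weighted blend of its neighbors. That blur is what a trained model tries to replace with plausible detail.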

The following discusses several super-resolution machine learning models. The source code is linked at the end of the post.

Stylized Super Resolution

A stylized super-resolution model is a machine learning model trained specifically to upscale stylized, cartoon, or anime images, in contrast to a model trained on realistic images. It is probably possible to train a single model that performs well on both art styles. However, a model targeting one specific art style could perhaps be smaller, and therefore more efficient for real-time applications.

Several super-resolution model architectures were trained, each with its own set of hyperparameters, to evaluate which model has the best visual and functional performance on large images.

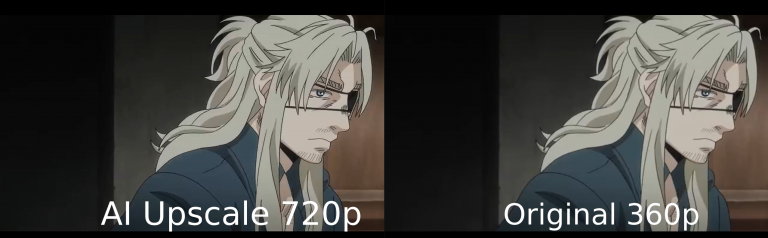

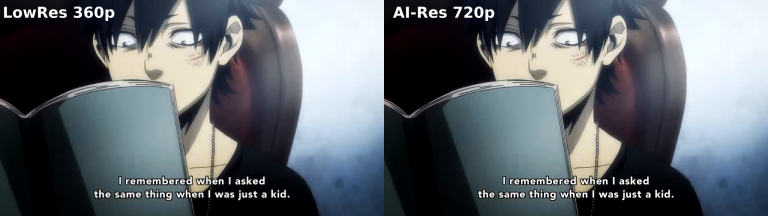

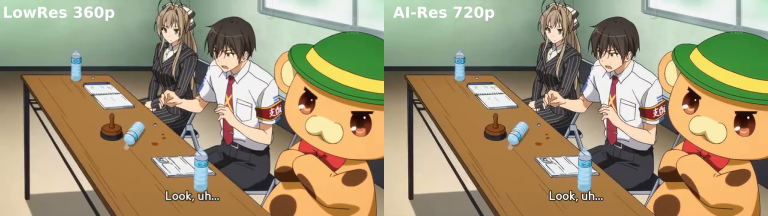

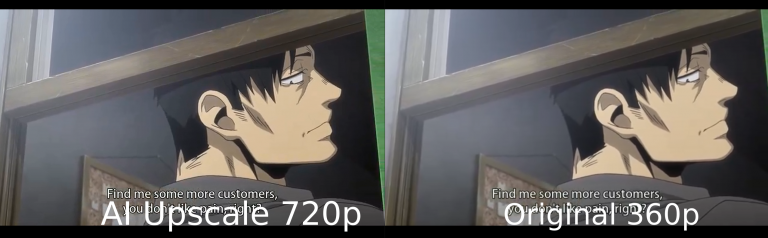

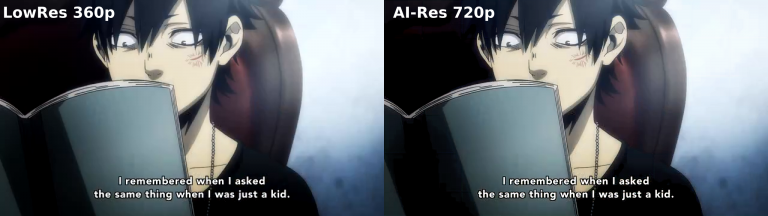

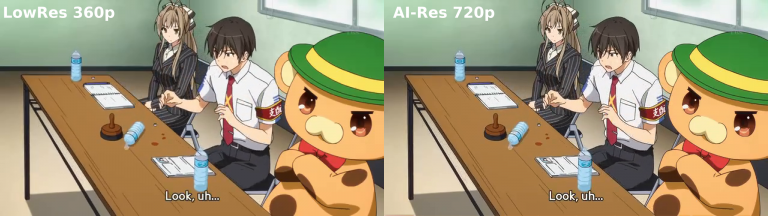

During evaluation, however, the differences were easier to see on low-resolution images than on images that already had a relatively high resolution. Using low-resolution, low-quality video frames (from 360p video) made it possible to evaluate the results visually.

EDSR – Enhanced Deep Residual Networks Super-Resolution

The best model trained so far is EDSR.
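EDSR (Lim et al., 2017) is built from residual blocks that omit batch normalization and, in its larger configuration, scale each block's residual by a constant factor (0.1 in the paper) before adding it back to the input. The sketch below illustrates one such block on a single-channel feature map using plain Python lists. It is a simplified illustration under stated assumptions: a real EDSR block operates on many channels (64 or 256 in the paper) inside a deep-learning framework, and the function names here are hypothetical rather than taken from the author's code.

```python
def conv3x3(feature, kernel):
    """Single-channel 3x3 convolution (cross-correlation, as in deep-learning
    frameworks) with zero padding, so the output has the same size as the input."""
    h, w = len(feature), len(feature[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for ky in range(3):
                for kx in range(3):
                    iy, ix = y + ky - 1, x + kx - 1
                    # Pixels outside the feature map count as zero.
                    if 0 <= iy < h and 0 <= ix < w:
                        acc += feature[iy][ix] * kernel[ky][kx]
            out[y][x] = acc
    return out


def relu(feature):
    """Element-wise max(0, v)."""
    return [[max(0.0, v) for v in row] for row in feature]


def edsr_residual_block(feature, kernel1, kernel2, res_scale=0.1):
    """conv -> ReLU -> conv with no batch normalization; the result is scaled
    by res_scale and added back to the block input (skip connection)."""
    residual = conv3x3(relu(conv3x3(feature, kernel1)), kernel2)
    return [[f + res_scale * r for f, r in zip(frow, rrow)]
            for frow, rrow in zip(feature, residual)]


identity = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
zero = [[0.0] * 3 for _ in range(3)]
feat = [[1.0, 2.0], [3.0, 4.0]]
```

Because the block only adds a small, scaled correction to its input, stacking many of them keeps training stable; with an all-zero second kernel the block reduces to the identity.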

VDR – Very Deep Convolutional Network

The second-best model so far is VDR.

Challenges – Seamless Tile Upscaling

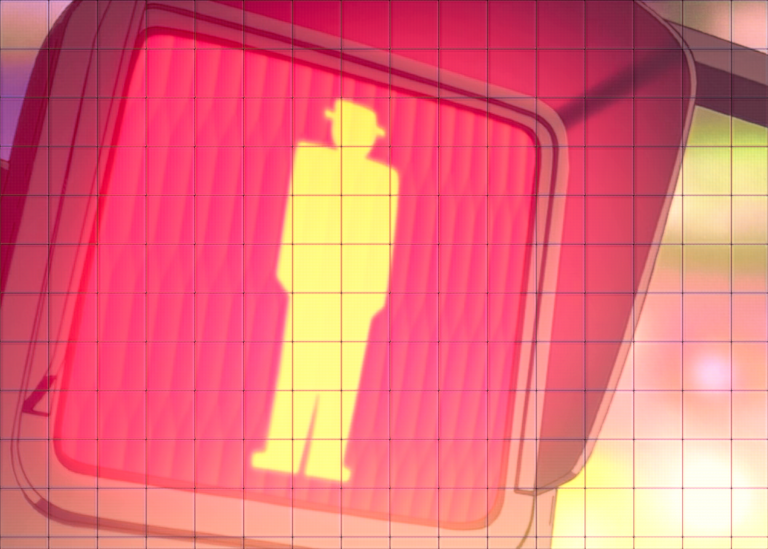

While experimenting with various suggested model architectures and searching for hyperparameters that yield good models, one problem was less noticeable at first: using a model on an image larger than the input and output size it was trained on.

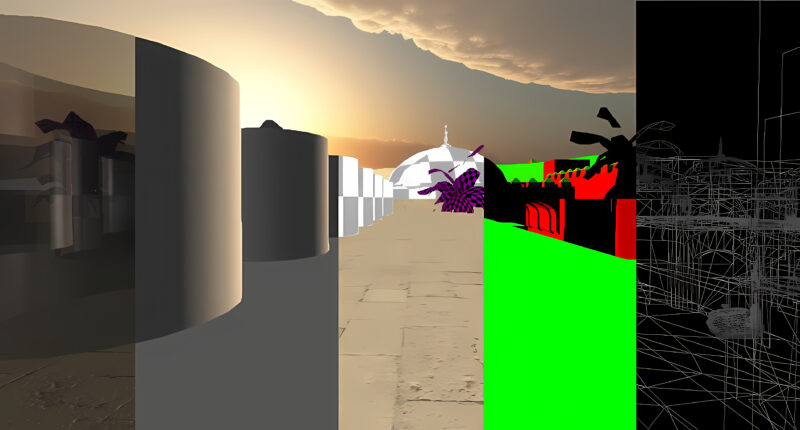

The solution was to upscale the image tile by tile. This worked better for some models than for others: many models produced good results within each tile but showed artifacts along the tile edges, which made them impractical for upscaling images larger than those they were trained on. The problem was most pronounced in the DCNN and AE-based (autoencoder) super-resolution models. With the EDSR model the seams were effectively invisible, although they could still be detected with image manipulation tools. See the images in this section for examples of the tile issues.
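The tiled approach can be sketched as follows: the image is cut into fixed-size tiles, each tile is upscaled on its own, and each result is pasted into the output at `scale` times the tile's position. This is a minimal sketch, assuming a grayscale image stored as a list of rows; `tiled_upscale` is a hypothetical helper, and `nearest_upscale` is only a stand-in for the trained model.

```python
def nearest_upscale(image, scale):
    """Stand-in for a trained model: nearest-neighbor upscale of a 2D list."""
    return [[row[x // scale] for x in range(len(row) * scale)]
            for row in image for _ in range(scale)]


def tiled_upscale(image, tile, scale, upscale_fn):
    """Upscale `image` tile by tile and stitch the results together.
    Each tile is processed in isolation, so a model that relies on neighboring
    pixels sees an artificial border at every tile edge."""
    h, w = len(image), len(image[0])
    out = [[0.0] * (w * scale) for _ in range(h * scale)]
    for ty in range(0, h, tile):
        for tx in range(0, w, tile):
            # Slice out one tile; tiles in the last row/column may be smaller.
            patch = [row[tx:tx + tile] for row in image[ty:ty + tile]]
            up = upscale_fn(patch, scale)
            # Copy the upscaled tile into its position in the output.
            for y, row in enumerate(up):
                out[ty * scale + y][tx * scale:tx * scale + len(row)] = row
    return out


img = [[float((x * 7 + y * 3) % 5) for x in range(5)] for y in range(5)]
stitched = tiled_upscale(img, 2, 2, nearest_upscale)
```

Nearest-neighbor upscaling looks at one pixel at a time, so here the stitched result is identical to upscaling the whole image at once. A trained network instead looks at a neighborhood of pixels, and near a tile edge that neighborhood is cut off, which is where the seams come from.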

With that issue stated, one possible fix is to add padding around the edges to extend the size of the input, then crop the padded edge out of the upscaled result. This could potentially remove the issue. However, it would add another layer of complexity to the algorithm and implementation, so it seems easier to find an alternative model architecture that does not suffer from this problem.
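The padding idea can be sketched as an extension of plain tiling: each tile is grown by up to `pad` real neighboring pixels on every side (fewer at the image border), upscaled, and then the upscaled border is cropped away so only the tile's own area is kept. This is a minimal sketch under stated assumptions: a grayscale image as a list of rows, padding taken from neighboring image pixels, and a hypothetical `blur_then_upscale` function standing in for a model whose receptive field is 3x3 pixels.

```python
def blur_then_upscale(image, scale):
    """Hypothetical stand-in for a model with a 3x3 receptive field:
    a 3x3 box blur (edge pixels replicated), then a nearest-neighbor upscale."""
    h, w = len(image), len(image[0])
    blurred = [[sum(image[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                    for dy in (-1, 0, 1) for dx in (-1, 0, 1)) / 9.0
                for x in range(w)] for y in range(h)]
    return [[row[x // scale] for x in range(w * scale)]
            for row in blurred for _ in range(scale)]


def padded_tiled_upscale(image, tile, pad, scale, upscale_fn):
    """Tile-based upscale where each tile gets `pad` pixels of real context."""
    h, w = len(image), len(image[0])
    out = [[0.0] * (w * scale) for _ in range(h * scale)]
    for ty in range(0, h, tile):
        for tx in range(0, w, tile):
            th, tw = min(tile, h - ty), min(tile, w - tx)
            # Grow the tile by up to `pad` pixels per side, clamped to the image.
            y0, y1 = max(ty - pad, 0), min(ty + th + pad, h)
            x0, x1 = max(tx - pad, 0), min(tx + tw + pad, w)
            patch = [row[x0:x1] for row in image[y0:y1]]
            up = upscale_fn(patch, scale)
            # Offset of the tile inside the upscaled patch; crop the rest away.
            cy, cx = (ty - y0) * scale, (tx - x0) * scale
            for y in range(th * scale):
                out[ty * scale + y][tx * scale:(tx + tw) * scale] = \
                    up[cy + y][cx:cx + tw * scale]
    return out


img = [[float((x * 7 + y * 3) % 5) for x in range(7)] for y in range(6)]
whole = blur_then_upscale(img, 2)
```

With `pad=0` this reduces to plain tiling and the tile edges differ from a whole-image pass; with `pad=1`, which covers the stand-in's 3x3 receptive field, the result matches the whole-image pass exactly. For a purely convolutional model, a padding at least as large as its receptive-field radius should, in principle, have the same effect, at the cost of upscaling the overlapping border pixels more than once.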

The following video shows the training progression of the EDSR, VDR, AE-SR, and DCNN super-resolution models.

Source Code

The source code can be found at the following GitHub repository.

Free/Open software developer, Linux user, Graphic C/C++ software developer, network & hardware enthusiast.