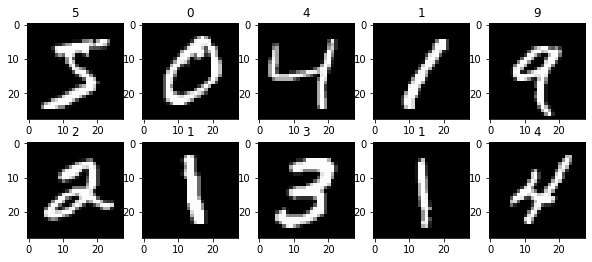

MNIST digits is one of the more commonly used datasets in machine learning and other image-processing fields. It is simply a set of square (28×28) grayscale images of handwritten digits, ranging from 0 to 9, as shown in the image above.

Here I will go through a common use case in machine learning: using a neural network to perform image recognition. We will try both a feedforward neural network and a convolutional neural network, see which performs best, and then briefly explain their respective performance. I will not cover the details, only the essence.

Both the feedforward and the convolutional network are based on the Python notebook.

Feedforward Neural Network

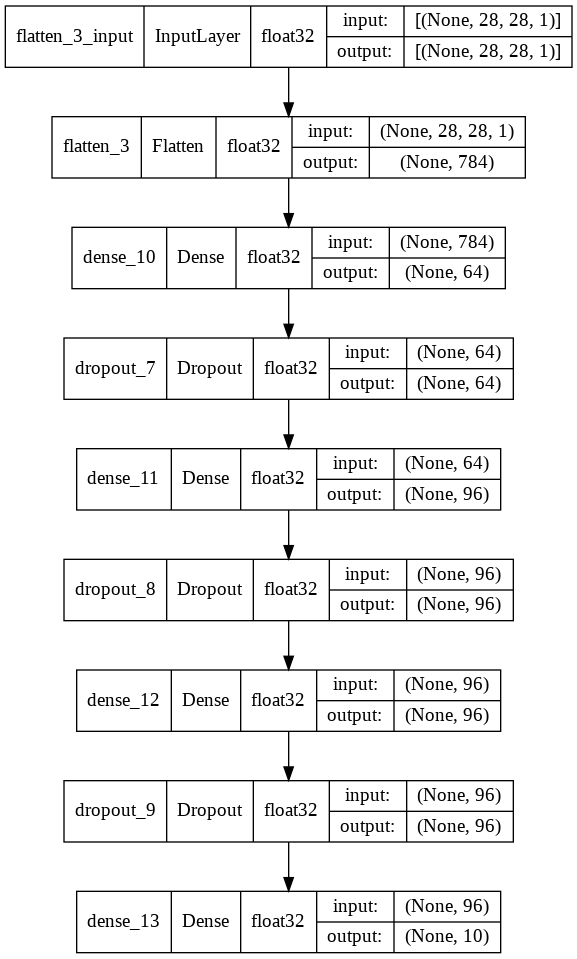

Feedforward neural networks are the most basic kind of neural network. They are simply a stack of layers processed one at a time: each neuron takes the sum of all nodes in the previous layer, each multiplied by a weight that is adjusted over time by the backpropagation process. A bias is then added to that sum; like the weights, it may start out random but is learned during training. Last but not least, the result is passed through an activation function, which is very important because it determines whether the neuron fires and with what magnitude.
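The computation of one layer can be sketched in plain Python. This is a minimal illustration, not the notebook's code: the function names `dense_layer`, `sigmoid` and `relu` are hypothetical, and the weights and biases are hard-coded here, whereas in a real network backpropagation would learn them.

```python
import math


def sigmoid(x):
    """Squash any real number into (0, 1); a classic activation function."""
    return 1.0 / (1.0 + math.exp(-x))


def relu(x):
    """Rectified linear unit: keep positive values, zero out negative ones."""
    return max(0.0, x)


def dense_layer(inputs, weights, biases, activation):
    """Compute one fully connected layer.

    weights[j][i] is the weight from input i to neuron j, and biases[j]
    is neuron j's bias (hard-coded here, learned in a real network).
    """
    outputs = []
    for w_row, b in zip(weights, biases):
        # Weighted sum of every node in the previous layer, plus the bias.
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        # The activation decides whether, and how strongly, the neuron fires.
        outputs.append(activation(z))
    return outputs


print(dense_layer([1.0, 2.0], [[0.5, -0.25], [1.0, 1.0]], [0.0, -1.0], relu))
# prints [0.0, 2.0]: the first neuron's sum is 0 (does not fire), the second's is 2
```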

Finally, the network is connected to an output layer of 10 neurons, one for each possible digit (0 to 9). See the following image for the layers used in the MNIST digit feedforward network.

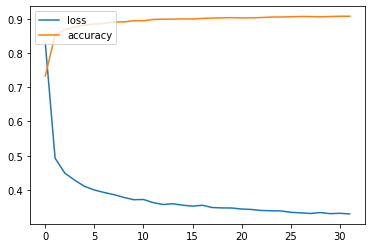
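The 10 output values are usually turned into probabilities with a softmax, and the predicted digit is the output neuron with the highest probability. The sketch below assumes a softmax output layer, which is common for MNIST classifiers but is not stated in the original; `softmax` and `predict_digit` are hypothetical helpers.

```python
import math


def softmax(logits):
    """Turn raw output-neuron values into probabilities that sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_digit(logits):
    """Return the index (digit 0-9) of the most probable output neuron."""
    probs = softmax(logits)
    return max(range(len(probs)), key=lambda d: probs[d])


# Hypothetical raw values of the 10 output neurons for one image.
outputs = [0.1, 0.2, 0.0, 0.3, 0.1, 0.0, 0.2, 3.0, 0.1, 0.4]
print(predict_digit(outputs))  # prints 7: digit 7 has by far the largest output
```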

The following images show the training history of the feedforward network, with the epoch on the X-axis. Accuracy is the fraction of images for which the network guesses the correct digit. Loss is the value of the cost function, which shows whether the model is learning: it should decrease during training, and it approaches 0 as the network's predictions match the training labels perfectly.
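Both metrics can be sketched in a few lines. The loss shown is categorical cross-entropy, which is an assumption: it is the usual cost function for a 10-class setup like this one, but the notebook's actual loss function is not stated here. The helper names are hypothetical.

```python
import math


def accuracy(predicted, actual):
    """Fraction of images whose predicted digit equals the true digit."""
    correct = sum(1 for p, a in zip(predicted, actual) if p == a)
    return correct / len(actual)


def cross_entropy(prob_rows, labels):
    """Average categorical cross-entropy over a batch.

    prob_rows[k] holds the 10 predicted probabilities for image k and
    labels[k] is its true digit. The loss is 0 only when every true digit
    gets probability 1.
    """
    eps = 1e-12  # guard against log(0)
    losses = [-math.log(max(row[y], eps)) for row, y in zip(prob_rows, labels)]
    return sum(losses) / len(losses)


print(accuracy([3, 1, 4, 1], [3, 1, 4, 7]))  # 0.75: three of four correct
uniform = [0.1] * 10  # an untrained network guessing evenly
print(cross_entropy([uniform], [5]))  # about 2.30, i.e. ln(10)
```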

Convolutional Network

Next is the convolutional network. In this case, it is not a fully convolutional network; rather, it combines convolutional layers with a feedforward network. First, the convolutional layers take the input image and extract features they can detect, such as horizontal, vertical, or diagonal lines, or whatever other features of the image they find useful.
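A convolution slides a small kernel over the image and sums the element-wise products, so the output is large wherever the image matches the kernel's pattern. Below is a minimal sketch with a hand-made vertical-edge kernel; in a real CNN the kernels are learned during training, and the names used here are hypothetical.

```python
def convolve2d(image, kernel):
    """Valid (no padding, stride 1) 2D convolution as used in CNN layers.

    Strictly this is cross-correlation (the kernel is not flipped), which is
    what deep-learning libraries compute under the name "convolution".
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Sum of element-wise products between the kernel and the patch under it.
            s = 0.0
            for i in range(kh):
                for j in range(kw):
                    s += image[r + i][c + j] * kernel[i][j]
            row.append(s)
        out.append(row)
    return out


# Hand-made kernel that responds to a dark-to-bright edge going left to right.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

# 5x5 image: two dark columns on the left, three bright columns on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
for row in convolve2d(image, VERTICAL_EDGE):
    print(row)  # [3.0, 3.0, 0.0]: strong response only where the edge is
```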

Each successive convolutional layer finds more abstract patterns built from the previous layer's features: lines combine into curves and strokes, and those combine into digit shapes, much as letters form words, words form sentences, and sentences form a paragraph.
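One concrete way to see why deeper layers can capture larger, more abstract patterns is the receptive field: the size of the input patch that a single unit in a layer depends on. The sketch below assumes a stack of convolutions with hypothetical kernel sizes; it only computes this geometry, not the learned features themselves.

```python
def receptive_field(kernel_sizes, strides=None):
    """Side length of the input patch that one unit in the last layer depends on.

    kernel_sizes[l] and strides[l] describe layer l (strides default to 1).
    """
    if strides is None:
        strides = [1] * len(kernel_sizes)
    rf, jump = 1, 1  # start from one pixel; adjacent units are 1 pixel apart
    for k, s in zip(kernel_sizes, strides):
        rf += (k - 1) * jump  # the kernel spans k units, each `jump` pixels apart
        jump *= s  # striding spreads the next layer's units further apart
    return rf


for depth in range(1, 4):
    print(depth, receptive_field([3] * depth))  # 1 3, then 2 5, then 3 7
```

Each extra 3×3 layer widens the view by two pixels, so a deeper unit can combine several smaller features (for example, lines) into a larger one.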

Finally, the features from the last convolutional layer are connected to a feedforward network, where they go through the whole feedforward process described above. That network ends in 10 output neurons, which give the probability for each respective digit.

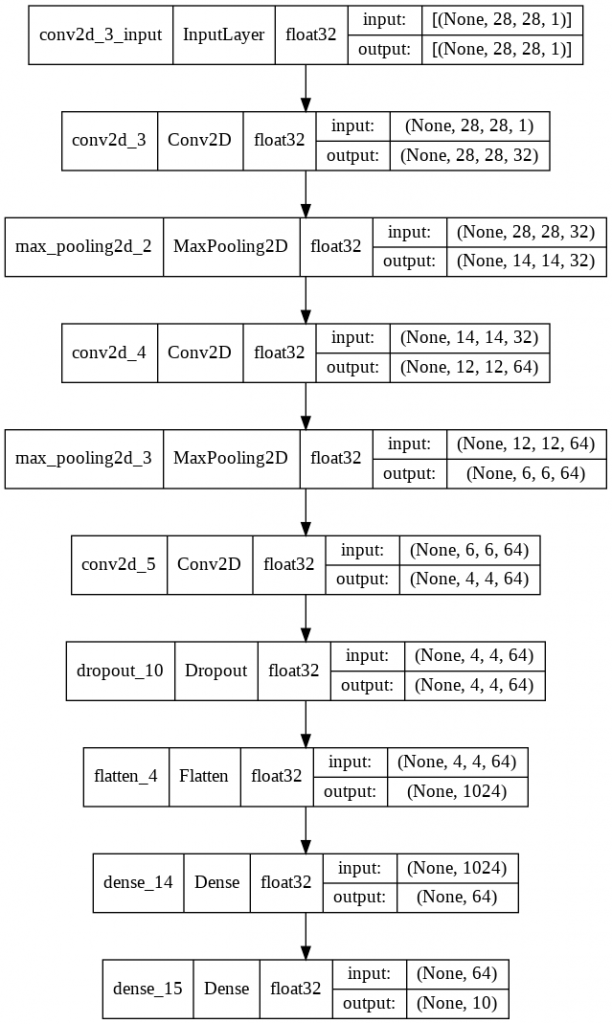
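Connecting the convolutional part to the feedforward part usually means flattening the stack of 2D feature maps into a single vector, which then feeds a dense layer like any other input. The sketch assumes a hypothetical single convolutional layer with 32 filters of size 3×3, stride 1 and no padding; the actual configuration is in the layer image for the convolutional network.

```python
def conv_output_size(n, k, stride=1, padding=0):
    """Side length after a convolution: floor((n + 2*padding - k) / stride) + 1."""
    return (n + 2 * padding - k) // stride + 1


def flatten(feature_maps):
    """Turn channels x height x width feature maps into one flat feature vector."""
    return [v for fmap in feature_maps for row in fmap for v in row]


# Hypothetical layer: 32 filters of size 3x3 applied to a 28x28 MNIST image.
side = conv_output_size(28, 3)
maps = [[[0.0] * side for _ in range(side)] for _ in range(32)]
features = flatten(maps)
print(side, len(features))  # prints 26 21632: the dense layer receives 21632 inputs
```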

See the following image for each layer used in the convolutional network.

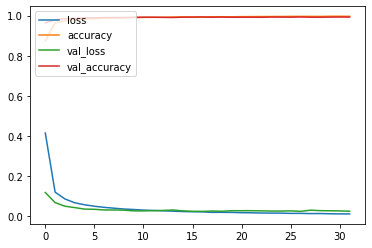

The training history shows that the convolutional network learns very quickly: both the loss and the accuracy more or less converge after just a couple of epochs.

Final

The results show that the convolutional network reached higher accuracy than the feedforward network, though the feedforward network was not all bad, reaching close to 90 percent accuracy. That might suggest a feedforward network is useful for image recognition, but in general it is not: the MNIST digit dataset consists of very simple images built from very simple patterns, like lines and curves. A feedforward network sees an image only as a flat list of pixels, with no built-in notion of neighboring pixels forming a shape, so anything more complicated would make it fail very quickly.

Finally, it should be noted that a better neural network model probably exists; this is just a simple setup.

The trained model can be downloaded from the following link, or it can be computed with the Python notebook.

Free/Open software developer, Linux user, Graphic C/C++ software developer, network & hardware enthusiast.